Why Gateway API

Limitations of Ingress

What are the limitations of Ingress?

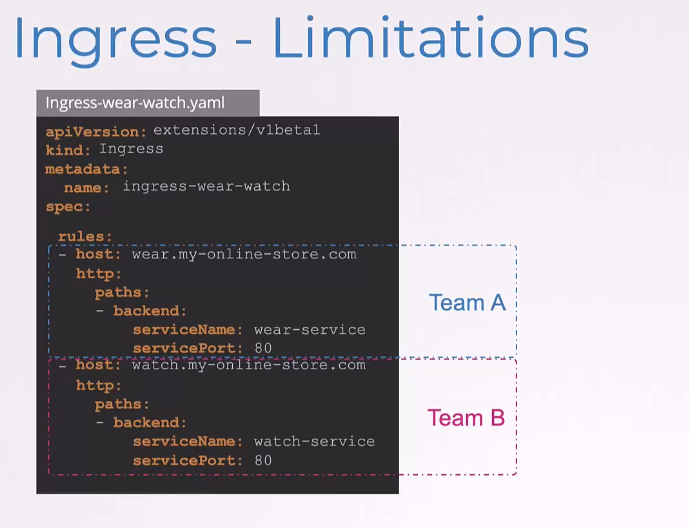

sEarlier, when we spoke about Ingress,

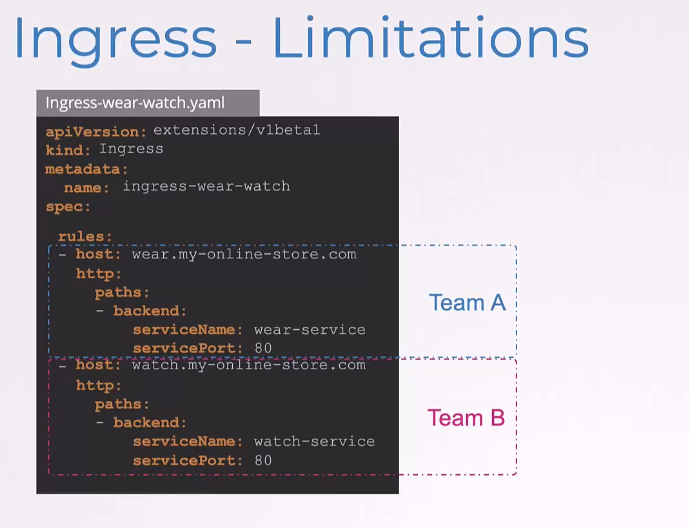

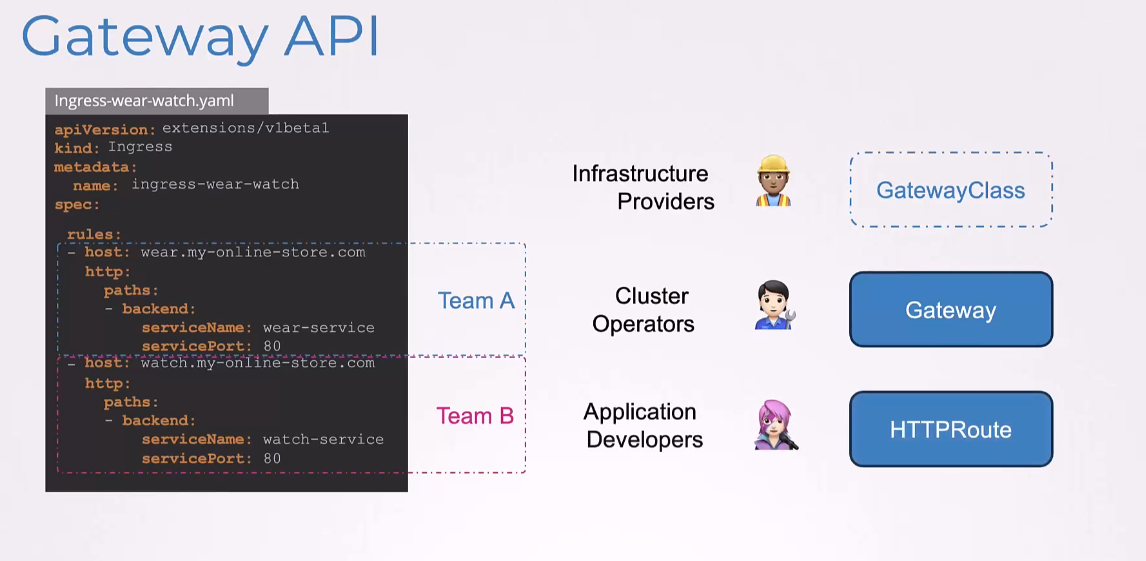

we spoke about two services, sharing the same Ingress resource.

What if each service was managed by different teams, or even completely different organizations or businesses?

What if the wear service was managed by Team A and the video service by Team B?

In that case, the underlying Ingress is still a single resource, which only one team can manage at a time. Both teams would need to coordinate changes to that same Ingress resource, which can lead to conflicts.

So, in a multi-tenant environment, Ingress can pose a challenge.

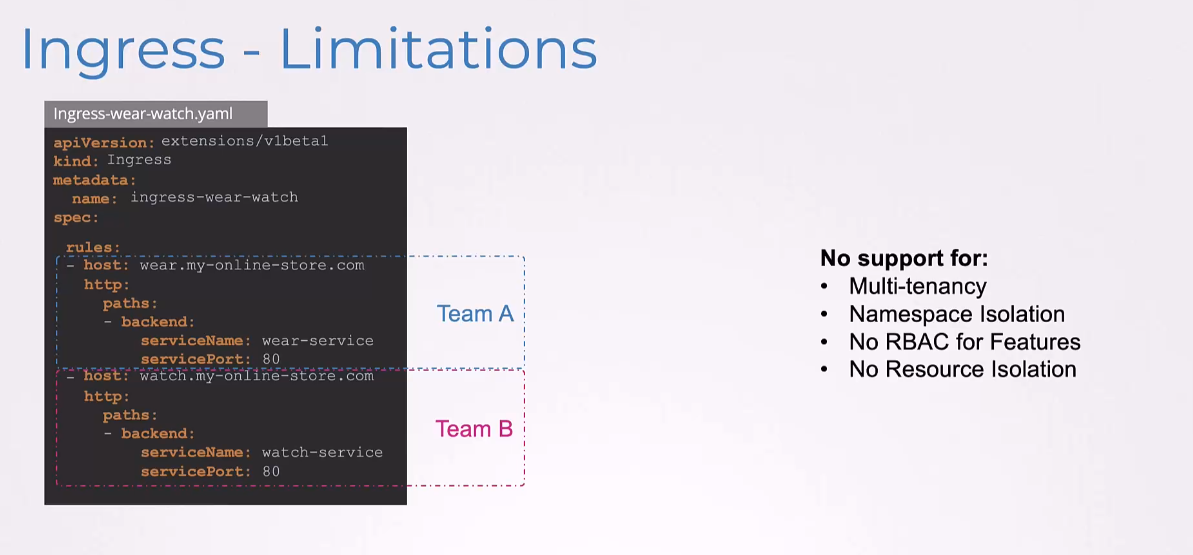

Ingress lacks sufficient support for multi-tenancy.
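
To make the problem concrete, here is a minimal sketch of a single Ingress routing to both services. The host, the service names `wear-service` and `video-service`, the ports, and the `nginx` ingress class are hypothetical placeholders. The point is that both teams' rules live in one object, so any change by Team A or Team B means editing the same resource.

```yaml
# Hypothetical shared Ingress: one object holds both teams' routing rules.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: shared-ingress
spec:
  ingressClassName: nginx          # assumes an NGINX Ingress controller is installed
  rules:
  - host: www.example.com
    http:
      paths:
      - path: /wear                # owned by Team A
        pathType: Prefix
        backend:
          service:
            name: wear-service
            port:
              number: 80
      - path: /video               # owned by Team B, but edited in the same object
        pathType: Prefix
        backend:
          service:
            name: video-service
            port:
              number: 80
```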

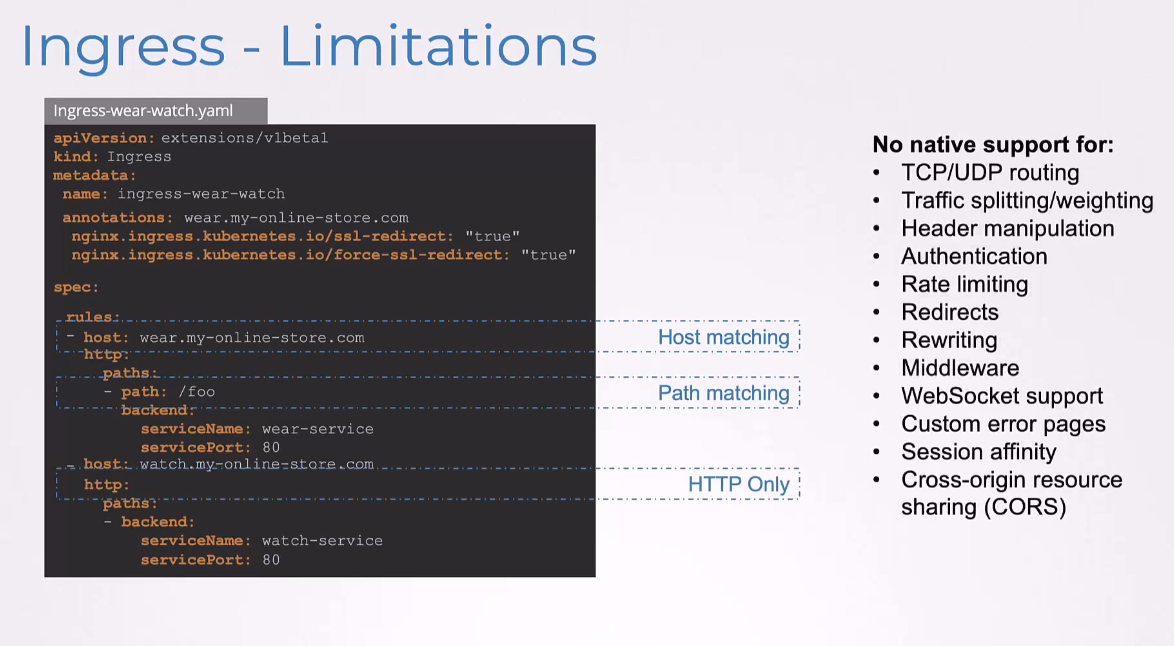

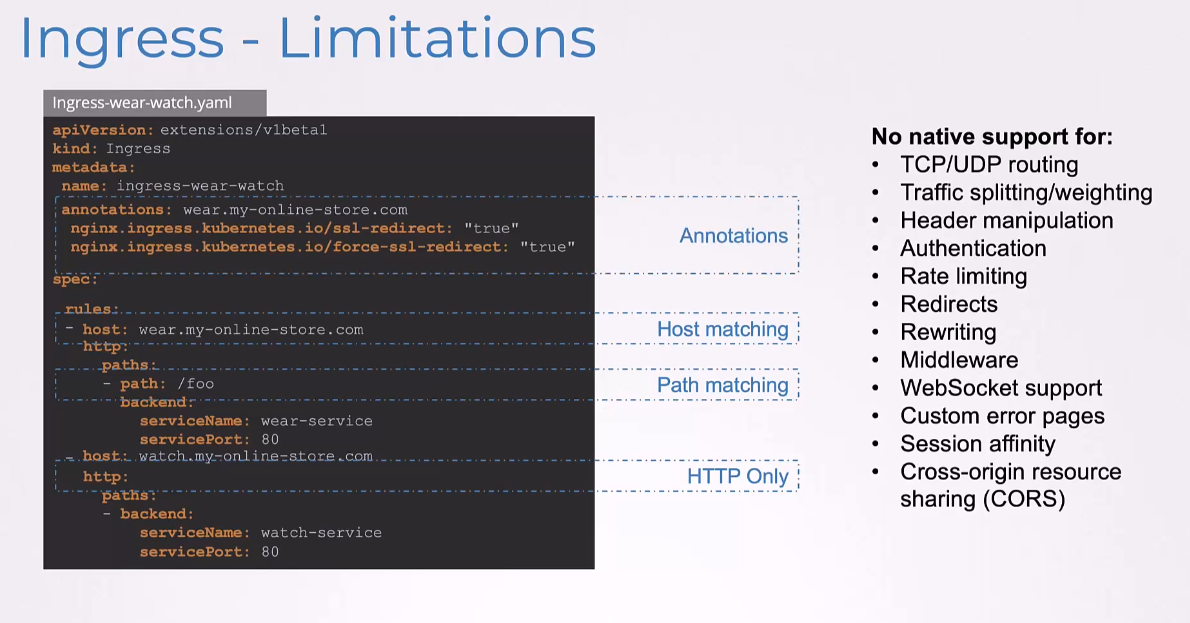

Another limitation is the range of options for configuring rules.

Ingress only supports HTTP-based rules, such as host matching and path matching.

Other capabilities, such as TCP/UDP routing, traffic splitting, header manipulation, authentication, and rate limiting, aren’t part of the Ingress specification.

Instead, they are implemented by the individual controllers.

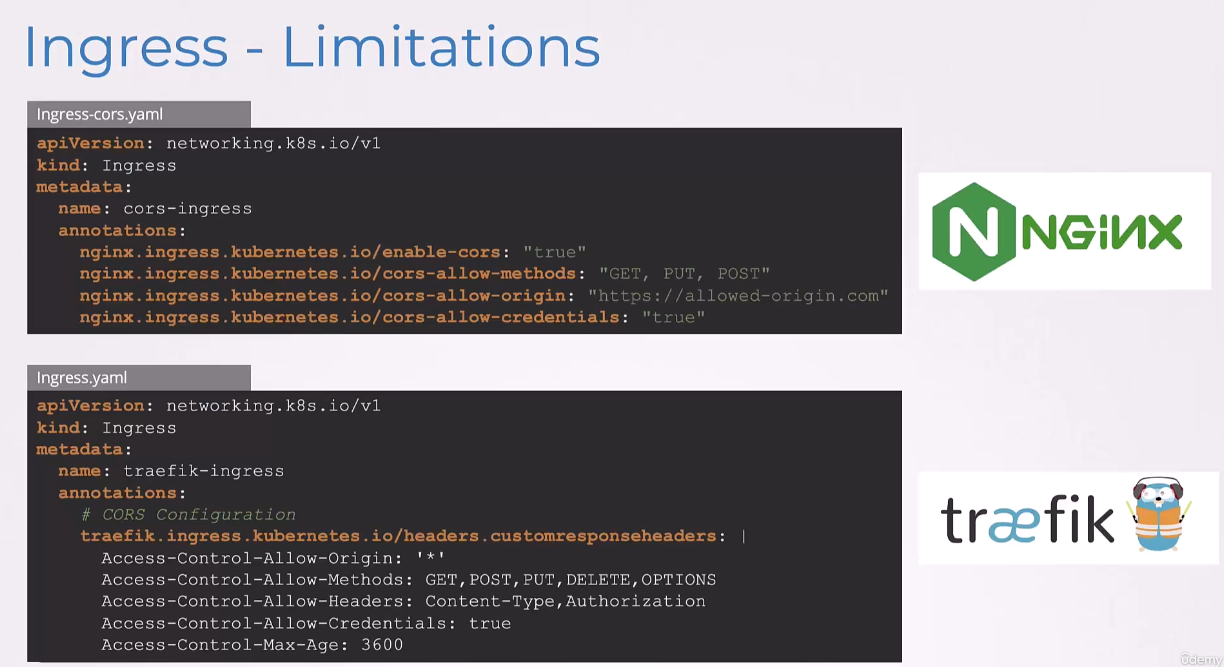

And these configurations are passed through to the controllers using annotations like this.

Here, you can see some NGINX-specific settings passed through, such as SSL redirects.
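
A sketch of what such annotations can look like with the community ingress-nginx controller. Only ingress-nginx reads these keys; the values are arbitrary examples. The rate-limiting annotation illustrates a feature that the Ingress specification itself doesn't define.

```yaml
# Fragment of an Ingress manifest: controller-specific settings live in annotations.
metadata:
  name: example-ingress
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /        # rewrite the matched path
    nginx.ingress.kubernetes.io/proxy-body-size: "8m"    # max request body size
    nginx.ingress.kubernetes.io/limit-rps: "10"          # requests per second per client IP
```

Kubernetes stores these values as opaque strings; if a key is misspelled, nothing rejects it.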

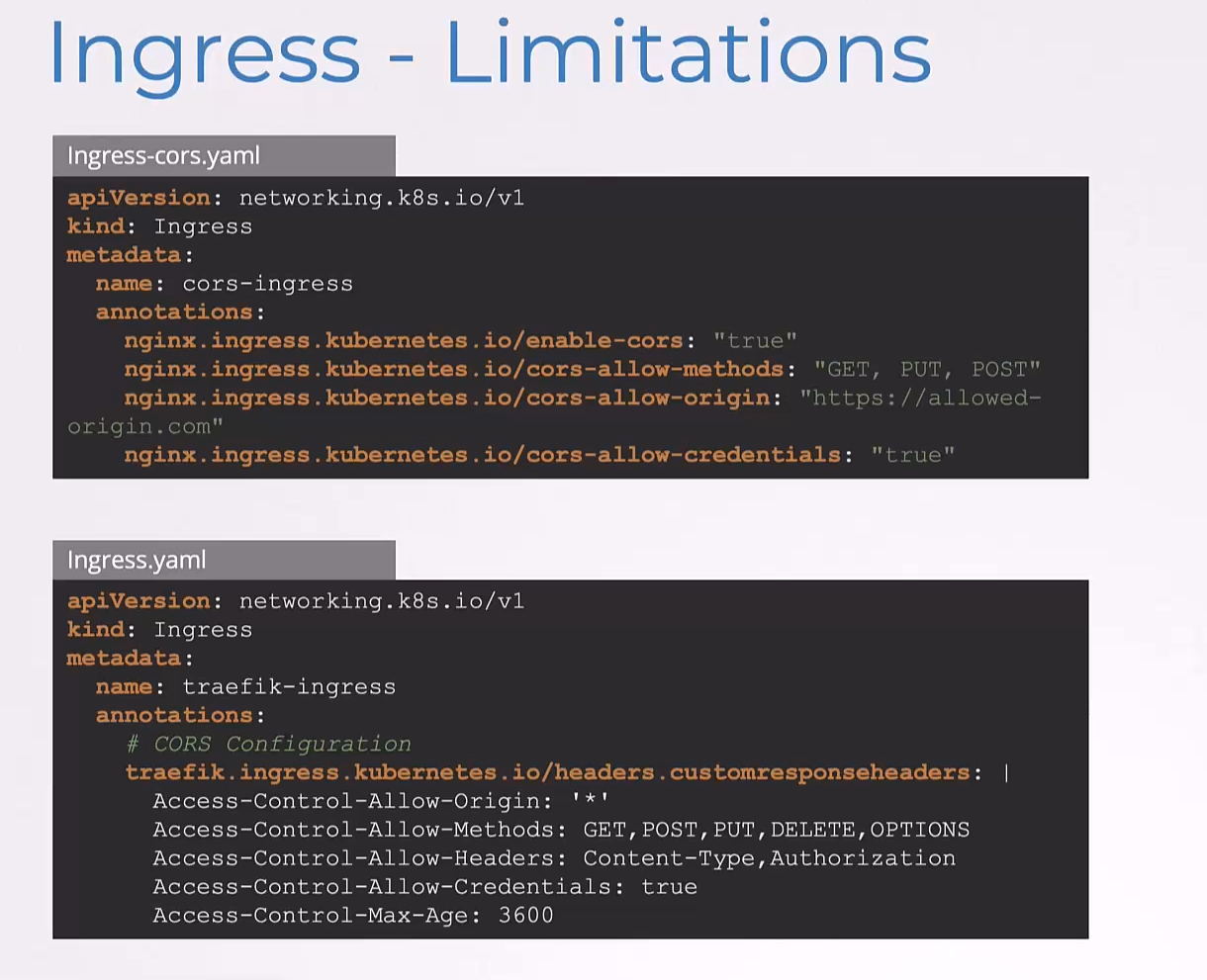

As a result, you’ll see quite complex annotations in different Ingress resources. For example, to configure CORS, the first example uses NGINX-specific configuration.

In the second example, we’re using the Traefik controller, so the configuration is Traefik-specific.
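
A sketch of the same CORS intent expressed for each controller. It assumes ingress-nginx for the first Ingress, and Traefik v2.10 or later (Middleware CRD group `traefik.io/v1alpha1`) for the second. Hosts, origins, and service names are hypothetical. Notice that the two versions share no field names.

```yaml
# ingress-nginx: CORS configured through annotations.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: api-nginx
  annotations:
    nginx.ingress.kubernetes.io/enable-cors: "true"
    nginx.ingress.kubernetes.io/cors-allow-origin: "https://app.example.com"
    nginx.ingress.kubernetes.io/cors-allow-methods: "GET, POST, PUT"
    nginx.ingress.kubernetes.io/cors-allow-headers: "Content-Type, Authorization"
spec:
  ingressClassName: nginx
  rules:
  - host: api.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: api-service
            port:
              number: 80
---
# Traefik: the same intent as a Middleware CRD...
apiVersion: traefik.io/v1alpha1
kind: Middleware
metadata:
  name: cors
  namespace: default
spec:
  headers:
    accessControlAllowOriginList:
    - "https://app.example.com"
    accessControlAllowMethods: ["GET", "POST", "PUT"]
    accessControlAllowHeaders: ["Content-Type", "Authorization"]
---
# ...referenced from the Ingress as <namespace>-<name>@kubernetescrd.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: api-traefik
  namespace: default
  annotations:
    traefik.ingress.kubernetes.io/router.middlewares: default-cors@kubernetescrd
spec:
  ingressClassName: traefik        # assumes the IngressClass is named "traefik"
  rules:
  - host: api.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: api-service
            port:
              number: 80
```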

The challenge here, as you can see, is that these configurations are specific to the underlying controllers, NGINX and Traefik respectively.

Kubernetes itself is not aware of these settings, so it can’t validate whether they’re right or wrong. They are merely passed through to the underlying controllers.

So, we end up with different configurations for different controllers for the same use case, and each configuration only works with its specific controller.

And that’s where Gateway APIs come in.

What is Gateway API

Gateway API is an official Kubernetes project focused on Layer 4 and Layer 7 routing.

This project represents the next generation of Kubernetes Ingress, load balancing, and service mesh APIs.

One of the challenges we discussed with Ingress was the lack of support for multi-tenancy.

When different teams share the same infrastructure, how do you give users the flexibility they need while the infrastructure owners keep control?

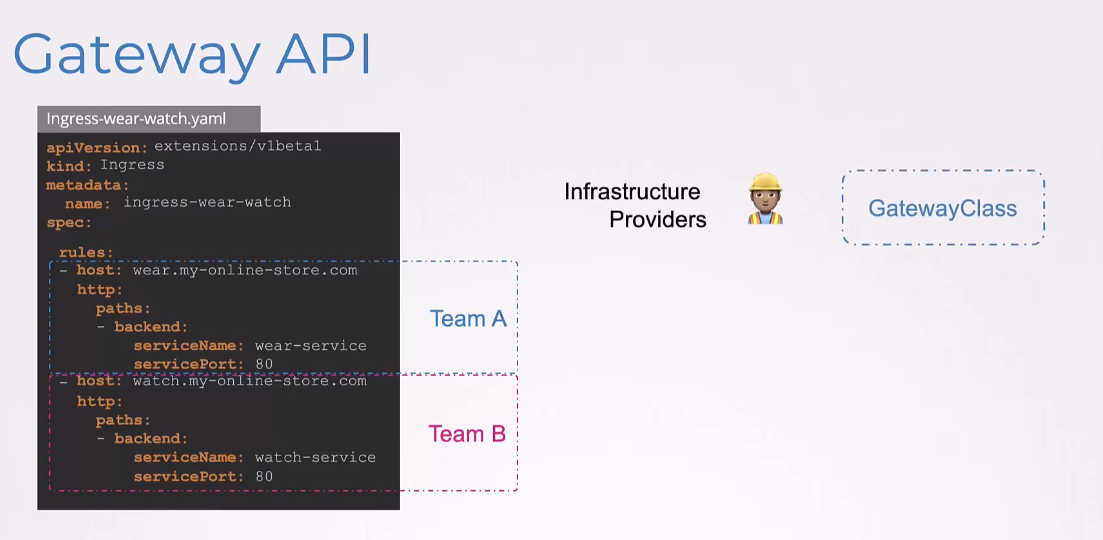

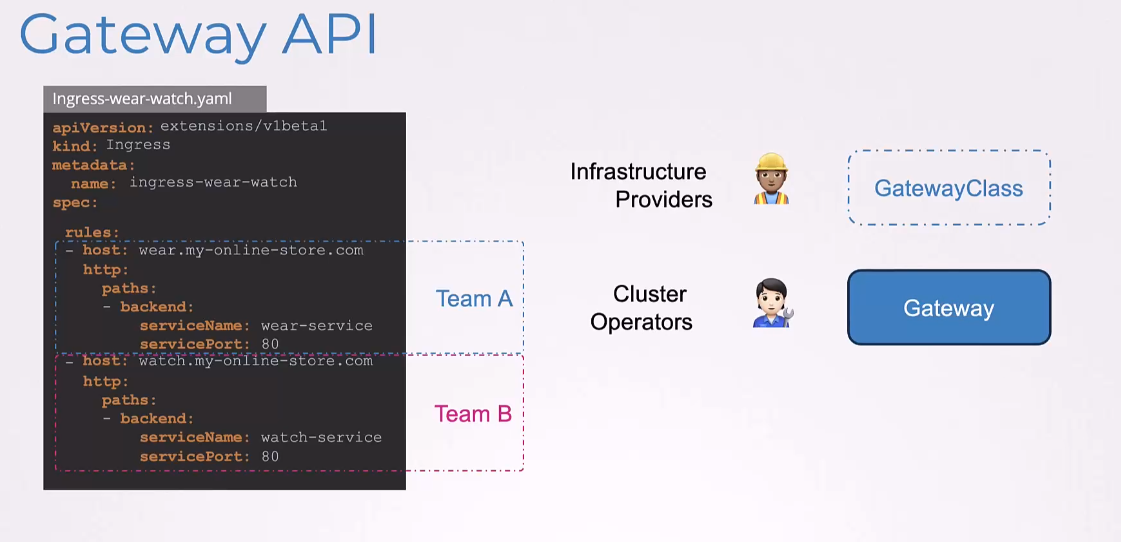

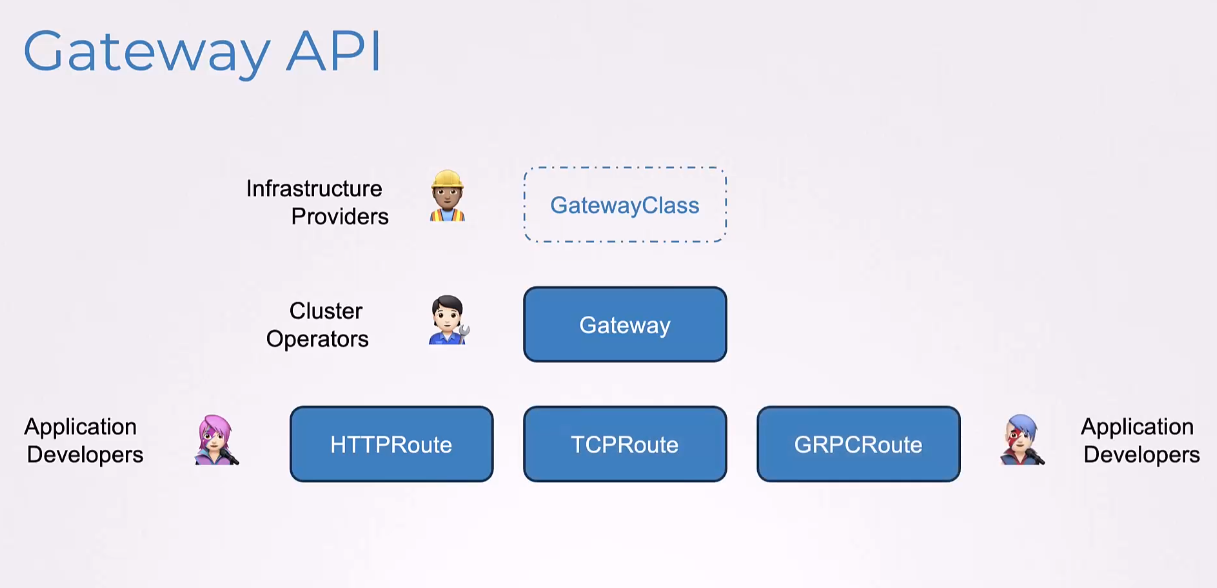

This is where Gateway API introduces three separate objects that are managed by three separate personas.

The infrastructure providers configure the GatewayClass.

The GatewayClass defines the underlying network infrastructure, such as NGINX, Traefik, or another load balancer.

Cluster operators then configure Gateways, which are instances of a GatewayClass.

Finally, application developers create HTTPRoutes.
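
Here is a minimal sketch of how this split can solve the multi-tenancy problem from earlier. The operator owns a shared Gateway, and each team attaches its own HTTPRoute from its own namespace. The namespaces `infra`, `team-a`, and `team-b`, the label `shared-gateway-access`, and the service names are all hypothetical; the team namespaces would need that label for their routes to be accepted.

```yaml
# Cluster operator: shared Gateway in a hypothetical "infra" namespace.
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: shared-gateway
  namespace: infra
spec:
  gatewayClassName: example-class
  listeners:
  - name: http
    protocol: HTTP
    port: 80
    allowedRoutes:
      namespaces:
        from: Selector
        selector:
          matchLabels:
            shared-gateway-access: "true"   # only labeled namespaces may attach
---
# Team A: edits only its own route, in its own namespace.
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: wear-route
  namespace: team-a
spec:
  parentRefs:
  - name: shared-gateway
    namespace: infra
  rules:
  - matches:
    - path:
        type: PathPrefix
        value: /wear
    backendRefs:
    - name: wear-service
      port: 80
---
# Team B: a separate object, so no edit conflicts with Team A.
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: video-route
  namespace: team-b
spec:
  parentRefs:
  - name: shared-gateway
    namespace: infra
  rules:
  - matches:
    - path:
        type: PathPrefix
        value: /video
    backendRefs:
    - name: video-service
      port: 80
```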

Unlike Ingress, which only supports HTTP routing rules, Gateway API also offers TCPRoute, GRPCRoute, and other route types.

So, let’s look at how each of these is created.

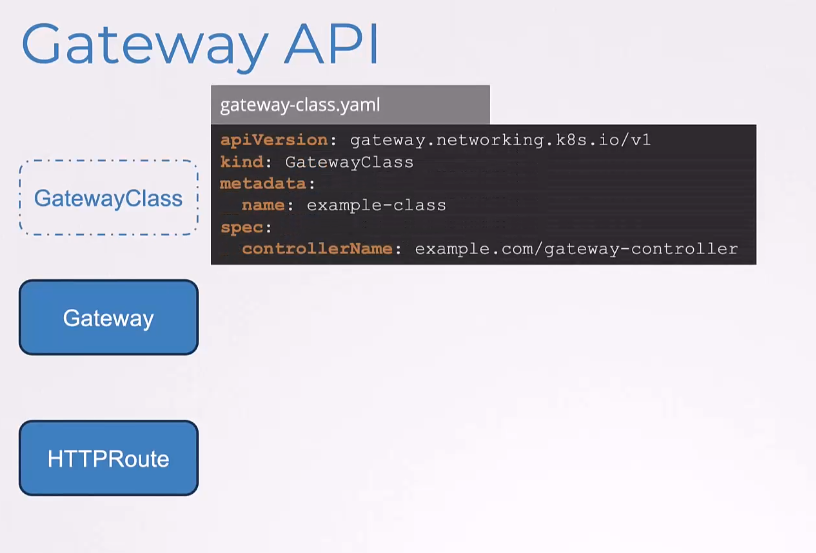

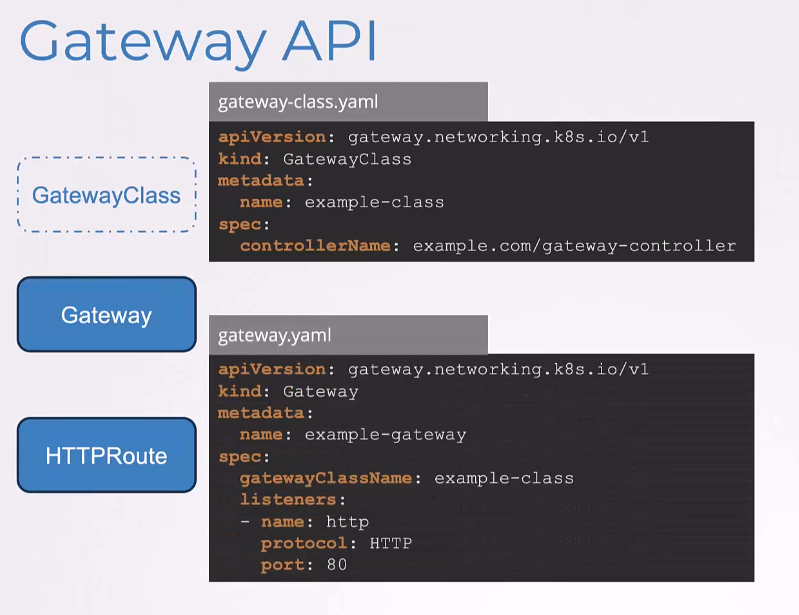

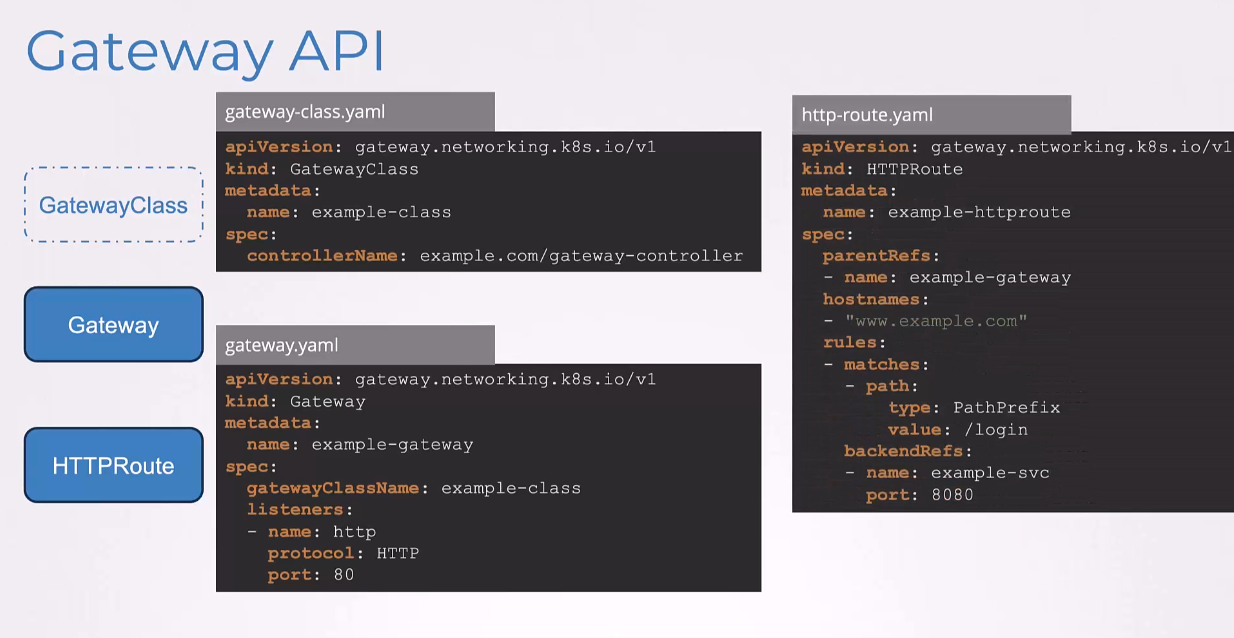

First, we have the GatewayClass.

It has the API version gateway.networking.k8s.io/v1, the kind GatewayClass, the name example-class, and the controller name gateway-controller.
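
A minimal sketch of that GatewayClass as a manifest. One assumption to flag: the API validates `controllerName` as a domain-prefixed path, so the sketch uses the hypothetical value `example.com/gateway-controller`. In practice, you copy the exact string documented by the controller you install.

```yaml
# GatewayClass: chosen by the infrastructure provider (cluster-scoped, no namespace).
apiVersion: gateway.networking.k8s.io/v1
kind: GatewayClass
metadata:
  name: example-class
spec:
  # Must match the name the installed controller watches for.
  # "example.com/gateway-controller" is a hypothetical placeholder.
  controllerName: example.com/gateway-controller
```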

Remember that, as with Ingress, we must also deploy a controller for Gateway API. We’ll talk about that in a bit, but note that the controller’s name is what goes here under controllerName.

Next, we have the Gateway object.

It has the API version gateway.networking.k8s.io/v1, the kind Gateway, and the name example-gateway. It specifies the GatewayClass we created above, and it has a listener configured with the name http, the HTTP protocol, and port 80.
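
A sketch of the Gateway just described, assuming a GatewayClass named example-class exists. No namespace is set, so it is created in whatever namespace you apply it to.

```yaml
# Gateway: an instance of example-class, managed by the cluster operator.
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: example-gateway
spec:
  gatewayClassName: example-class   # refers to the GatewayClass by name
  listeners:
  - name: http                      # routes can target this listener by name
    protocol: HTTP
    port: 80
```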

Finally, we have the HTTPRoute, which again uses gateway.networking.k8s.io/v1 as the API version. The kind is HTTPRoute, the name is example-httproute, and parentRefs points to the example-gateway we defined.

It matches requests with the hostname www.example.com, and a rule is configured with the path /login. The backend it refers to is example-svc, a Service in the cluster.

In this example, HTTP traffic arriving at example-gateway with the Host header set to www.example.com and a request path of /login will be routed to the service example-svc on port 8080.
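
A sketch of that HTTPRoute. The lecture only says the path is "login", so the `PathPrefix` match type is an assumption; `Exact` would also be valid if only `/login` itself should match.

```yaml
# HTTPRoute: written by the application developer.
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: example-httproute
spec:
  parentRefs:
  - name: example-gateway          # attach to the Gateway above
  hostnames:
  - "www.example.com"              # matched against the Host header
  rules:
  - matches:
    - path:
        type: PathPrefix           # assumption: prefix match on /login
        value: /login
    backendRefs:
    - name: example-svc            # Service in the same namespace
      port: 8080
```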

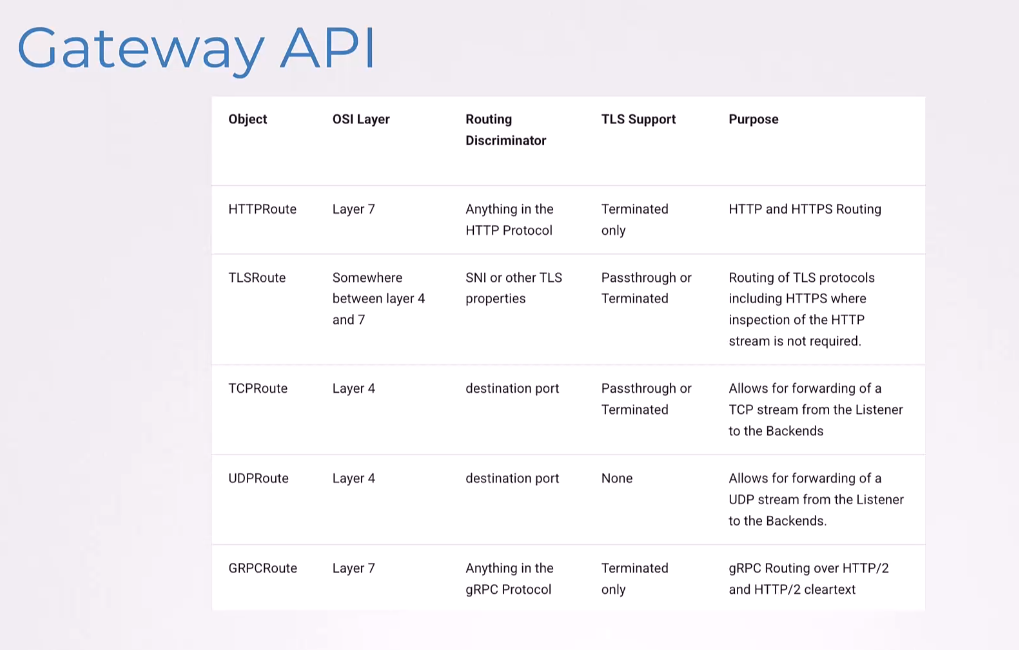

The HTTPRoute we just saw handles a Layer 7 protocol, HTTP.

Other available route types include TLSRoute, TCPRoute, UDPRoute, and GRPCRoute.
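
A sketch of a non-HTTP route. This assumes the Gateway API experimental-channel CRDs are installed, because TCPRoute is served there as `gateway.networking.k8s.io/v1alpha2`. It also assumes that your controller supports TCPRoute. The gateway name, listener name, and `postgres` Service are hypothetical.

```yaml
# A Gateway with a raw TCP listener (no HTTP parsing).
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: tcp-gateway
spec:
  gatewayClassName: example-class
  listeners:
  - name: postgres
    protocol: TCP
    port: 5432
    allowedRoutes:
      kinds:
      - kind: TCPRoute              # only TCPRoutes may attach here
---
# TCPRoute: forwards the TCP stream to a backend Service.
apiVersion: gateway.networking.k8s.io/v1alpha2
kind: TCPRoute
metadata:
  name: postgres-route
spec:
  parentRefs:
  - name: tcp-gateway
    sectionName: postgres           # bind to the listener by name
  rules:
  - backendRefs:
    - name: postgres
      port: 5432
```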

Now, back to a few of the limitations we saw with Ingress: let’s see how they are handled in Gateway API.

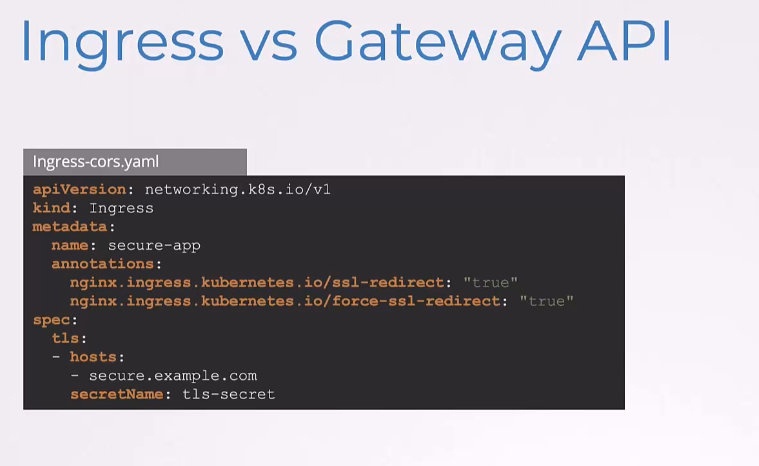

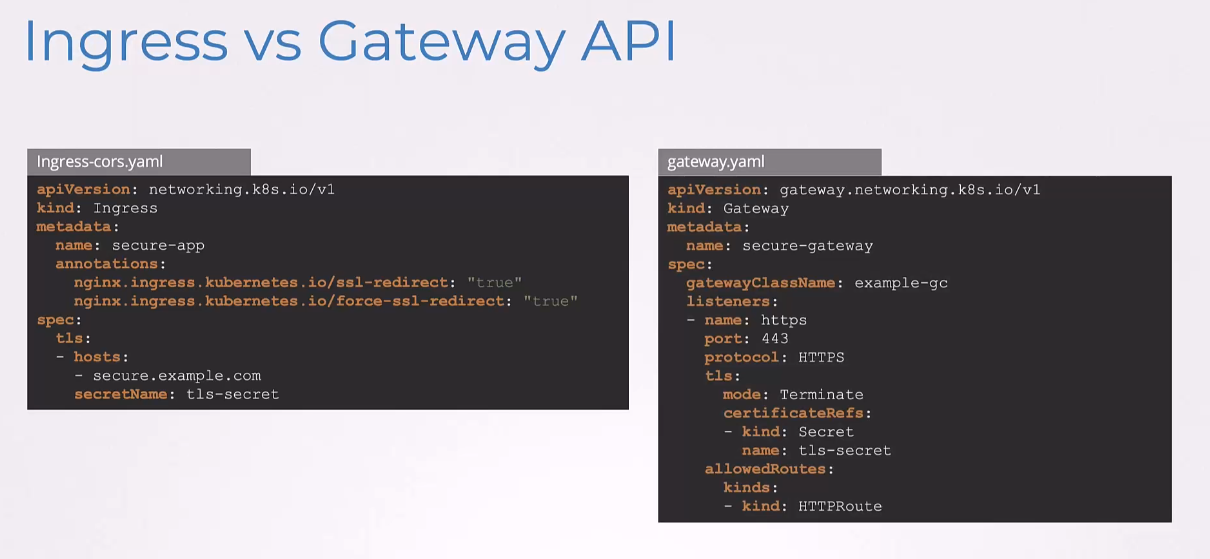

Earlier, we saw that with Ingress, the basic TLS configuration goes in the spec.tls section. This is the native Ingress way.

But to ensure all traffic uses HTTPS, we want to redirect HTTP to HTTPS, and for that we need an NGINX-specific annotation. Such annotations won’t work with other Ingress controllers.
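
A sketch of the Ingress side of this comparison, assuming the ingress-nginx controller and an existing TLS Secret named tls-secret. Host and service names are hypothetical. The `tls` block is portable Ingress, but the redirect annotation is only understood by ingress-nginx.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: secure-ingress
  annotations:
    # Read only by ingress-nginx; other controllers ignore it.
    nginx.ingress.kubernetes.io/force-ssl-redirect: "true"
spec:
  ingressClassName: nginx
  tls:                              # native Ingress TLS configuration
  - hosts:
    - www.example.com
    secretName: tls-secret
  rules:
  - host: www.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: example-svc
            port:
              number: 8080
```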

The Gateway API approach is much more declarative and structured. Everything is defined in the proper spec, with no annotations needed.

The listeners section clearly shows we are setting up an HTTPS endpoint on port 443. The TLS configuration is explicit: mode Terminate shows that we are terminating TLS at the gateway. The certificateRefs field directly references our tls-secret, and allowedRoutes specifies which kinds of routes can attach to this listener, in this case, HTTPRoutes.
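
A sketch of the Gateway described above, plus one optional addition that matches the Ingress redirect use case. The addition is a plain HTTP listener and an HTTPRoute using the standard `RequestRedirect` filter. The `secure-gateway` and `http-to-https` names, and the extra port-80 listener, are assumptions; the gateway uses the example-class GatewayClass.

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: secure-gateway
spec:
  gatewayClassName: example-class
  listeners:
  - name: http                      # assumption: port 80 kept only for redirects
    protocol: HTTP
    port: 80
  - name: https
    protocol: HTTPS
    port: 443
    tls:
      mode: Terminate               # TLS ends at the gateway
      certificateRefs:
      - kind: Secret
        name: tls-secret
    allowedRoutes:
      kinds:
      - kind: HTTPRoute
---
# Redirect every plain-HTTP request to HTTPS without any annotations.
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: http-to-https
spec:
  parentRefs:
  - name: secure-gateway
    sectionName: http               # attach only to the port-80 listener
  rules:
  - filters:
    - type: RequestRedirect
      requestRedirect:
        scheme: https               # port defaults to 443 for https
        statusCode: 301
```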

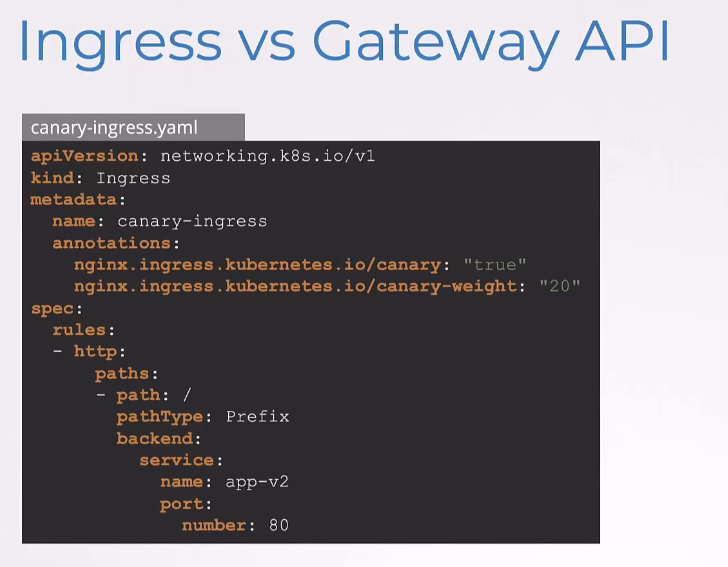

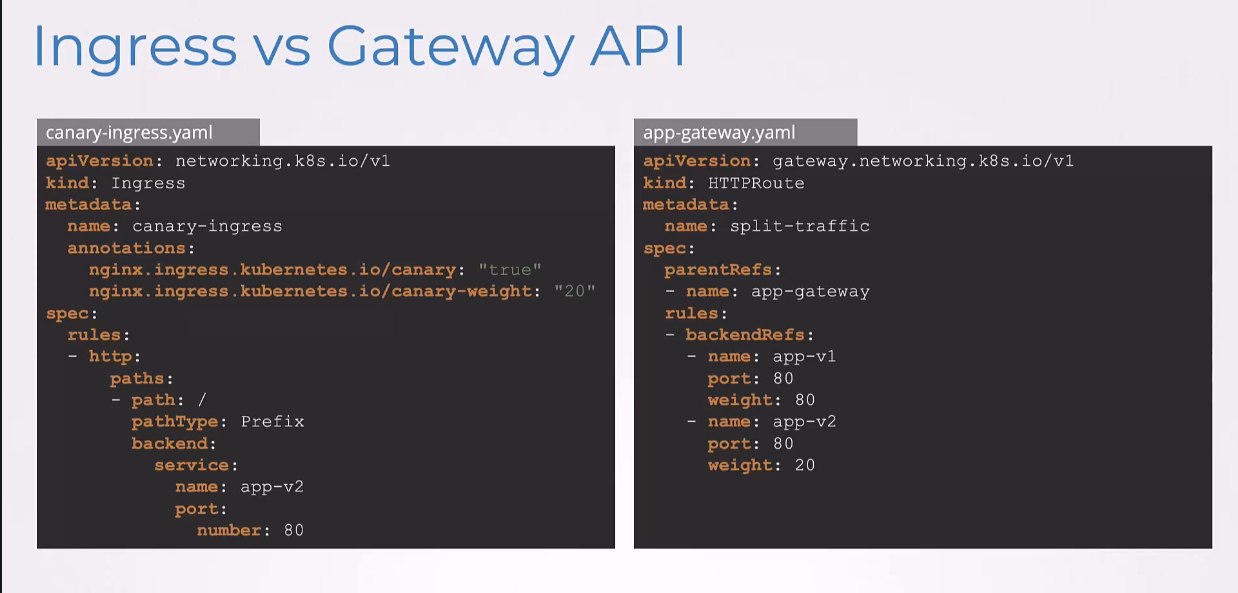

Here’s another example.

Through NGINX-specific annotations, we are saying, “This is a canary deployment; send 20% of the traffic here.”

The remaining 80% of traffic automatically goes to the primary Ingress, a separate Ingress resource for the same host and path that has to exist elsewhere. But this isn’t obvious from looking at this configuration alone.

And it only works with NGINX. Other controllers might not understand these annotations, because they are specific to NGINX.
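
A sketch of the two ingress-nginx objects involved in this canary setup, with hypothetical names (`app-v1`, `app-v2`, `www.example.com`). The canary only works because a primary Ingress with the same host and path exists. Nothing in the canary object itself points to it.

```yaml
# Primary Ingress: receives whatever traffic the canary doesn't take.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: app-primary
spec:
  ingressClassName: nginx
  rules:
  - host: www.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: app-v1
            port:
              number: 80
---
# Canary Ingress: same host and path, marked as a canary via annotations.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: app-canary
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-weight: "20"   # roughly 20% of requests
spec:
  ingressClassName: nginx
  rules:
  - host: www.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: app-v2
            port:
              number: 80
```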

Gateway API, by contrast, tells the complete story in one clear configuration. Everything is visible in one place, with no hidden primary configuration needed.

We can see both service versions, v1 and v2, right there in the backendRefs section. The traffic split is explicitly defined: 80% goes to v1 and 20% goes to v2. No annotations are needed; this is a native feature.

So, this works the same way across any Gateway API implementation, without being tied to NGINX or any other specific controller.
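
A sketch of the weighted split as a single HTTPRoute, reusing the hypothetical `app-v1` and `app-v2` Services and the example-gateway Gateway. Weights are relative to each other, so 80 and 20 yield an 80/20 split; 4 and 1 would give the same ratio.

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: app-canary-split
spec:
  parentRefs:
  - name: example-gateway
  hostnames:
  - "www.example.com"
  rules:
  - backendRefs:
    # Share of traffic = weight / sum of weights: 80/100 and 20/100.
    - name: app-v1
      port: 80
      weight: 80
    - name: app-v2
      port: 80
      weight: 20
```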

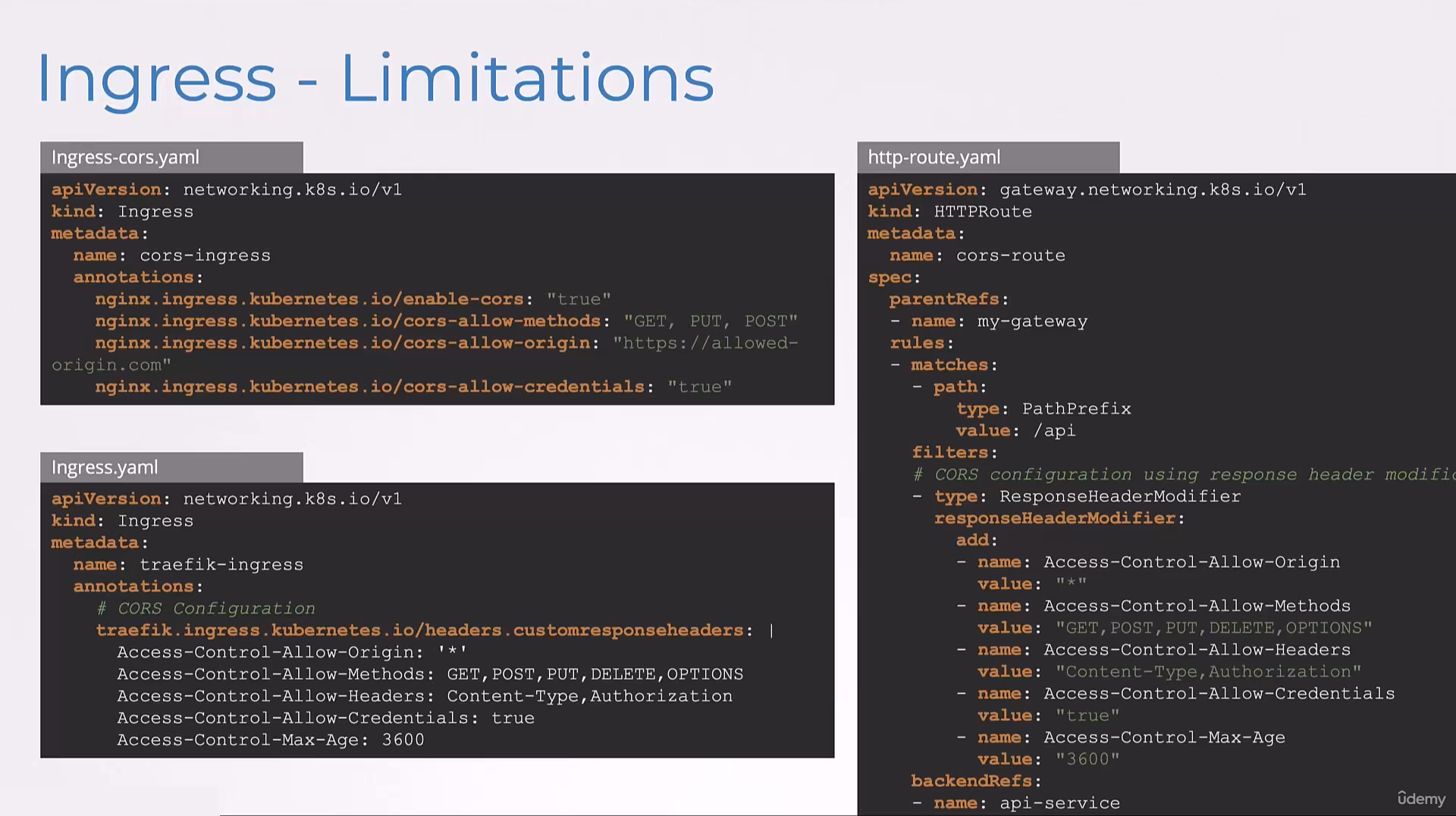

Here’s yet another example.

Earlier, we saw the complex, controller-specific annotations needed for advanced configurations such as CORS settings.

With Gateway API, we can configure these centrally, with no annotations needed: everything is defined in the spec.

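The CORS headers are explicitly defined using the ResponseHeaderModifier filter. The configuration is more readable and self-documenting, and it behaves consistently across Gateway API implementations that support this filter (ResponseHeaderModifier has Extended support, so not every implementation is required to offer it).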
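
A sketch of a CORS setup using the ResponseHeaderModifier filter. The origin, path, and `api-service` backend are hypothetical. This filter only adds headers to responses that pass through the gateway. The backend still has to answer browser preflight `OPTIONS` requests with a success status.

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: cors-route
spec:
  parentRefs:
  - name: example-gateway
  rules:
  - matches:
    - path:
        type: PathPrefix
        value: /api
    filters:
    - type: ResponseHeaderModifier
      responseHeaderModifier:
        add:                        # appended to every response on this rule
        - name: Access-Control-Allow-Origin
          value: "https://app.example.com"
        - name: Access-Control-Allow-Methods
          value: "GET, POST, PUT"
        - name: Access-Control-Allow-Headers
          value: "Content-Type, Authorization"
    backendRefs:
    - name: api-service
      port: 80
```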

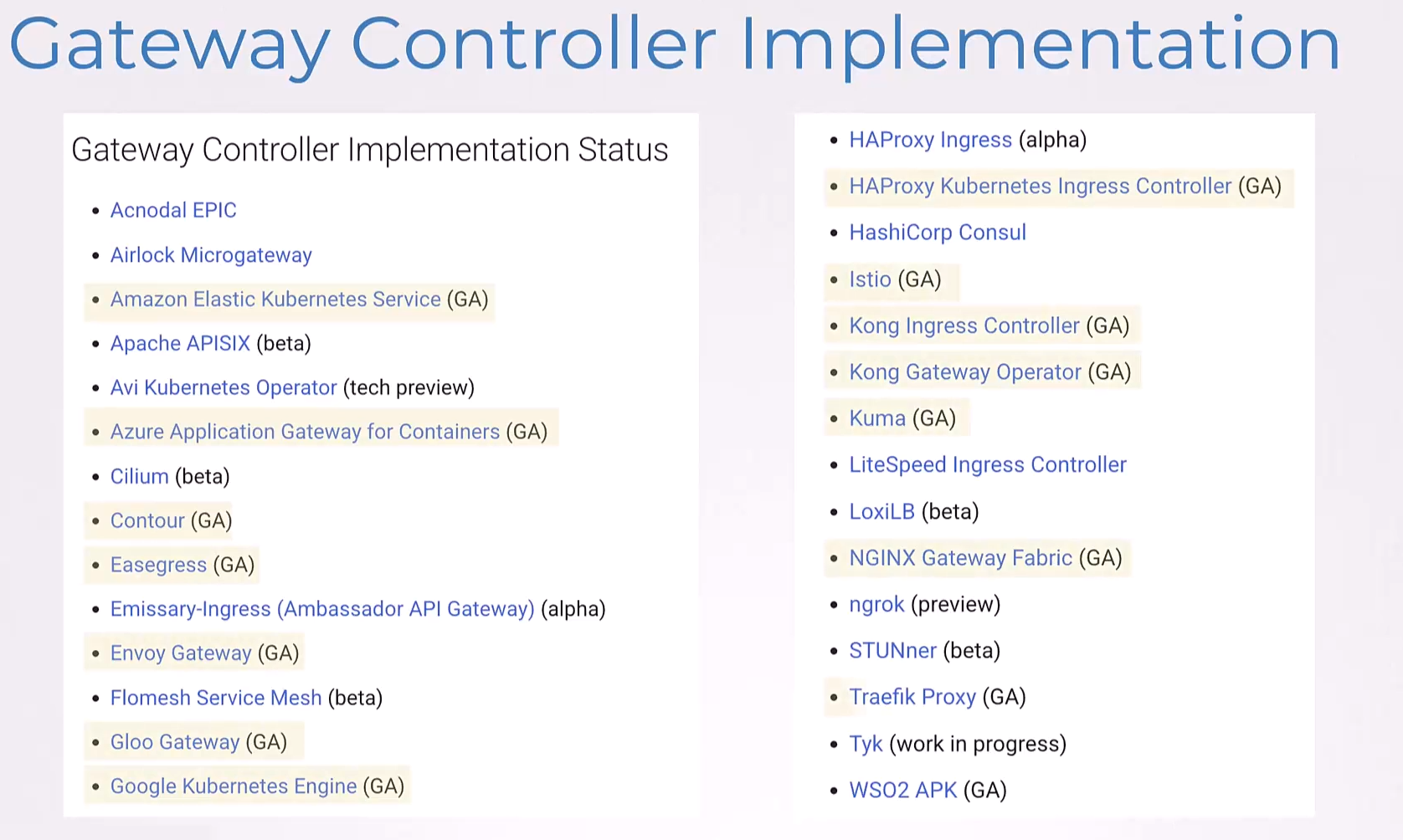

Speaking of Gateway API implementations, most controllers have already implemented Gateway API or are in the process of doing so.

Amazon EKS, Azure Application Gateway for Containers, Contour, Easegress, Envoy Gateway, Google Kubernetes Engine, HAProxy Kubernetes Ingress Controller, Istio, Kong, Kuma, NGINX Gateway Fabric, and Traefik Proxy already have GA implementations, and others are on their way.

Well, that’s it for this lecture. Head over to the labs and practice working with Gateway API controllers.