we will see how Kubernetes is configured to use these network plugins.

As we discussed in the prerequisite lecture, CNI defines the responsibilities of the container runtime.

CNI specification

As per CNI, container runtimes, in our case Kubernetes, are responsible for creating container network namespaces, and for identifying and attaching those namespaces to the right network by calling the right network plugin.

So where do we specify the CNI plugins for Kubernetes to use?

The CNI plugin must be invoked by the component

within Kubernetes that is responsible

for creating containers

because that component must then invoke

the appropriate network plugin

after the container is created.
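To make that hand-off concrete, here is a minimal sketch of how a runtime might prepare a call to a plugin. The environment variable names come from the CNI specification; the container ID, namespace path, and the helper name `build_cni_invocation` are hypothetical, and the sketch only builds the call rather than running a plugin.

```python
import json
import posixpath


def build_cni_invocation(plugin_type, container_id, netns_path, net_conf,
                         bin_dir="/opt/cni/bin", ifname="eth0"):
    """Return (argv, env, stdin_bytes) for a CNI ADD call, without running it."""
    # The plugin is an executable named after the "type" in the configuration.
    argv = [posixpath.join(bin_dir, plugin_type)]
    # Parameters are passed to the plugin through environment variables.
    env = {
        "CNI_COMMAND": "ADD",             # attach the container to the network
        "CNI_CONTAINERID": container_id,  # hypothetical ID, for illustration
        "CNI_NETNS": netns_path,          # path to the network namespace
        "CNI_IFNAME": ifname,             # interface to create in the container
        "CNI_PATH": bin_dir,              # where plugin executables live
    }
    # The network configuration itself is sent as JSON on standard input.
    stdin_bytes = json.dumps(net_conf).encode()
    return argv, env, stdin_bytes


argv, env, payload = build_cni_invocation(
    "bridge", "abc123", "/var/run/netns/abc123",
    {"cniVersion": "0.3.1", "name": "mynet", "type": "bridge"},
)
print(argv, env["CNI_COMMAND"])
```

A real runtime would then execute `argv` with that environment and feed `payload` to the plugin's standard input.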

Instructor 2: The component that is responsible

for creating containers is the container runtime.

Two good examples are Containerd and CRI-O.

Note that Docker was the original container runtime,

which was later replaced by an abstraction

called Containerd, which we kind of explained

in the beginning of this course.

Now, we discussed earlier

that there are many network plugins available today.

How do you configure these container runtimes

to use a particular plugin?

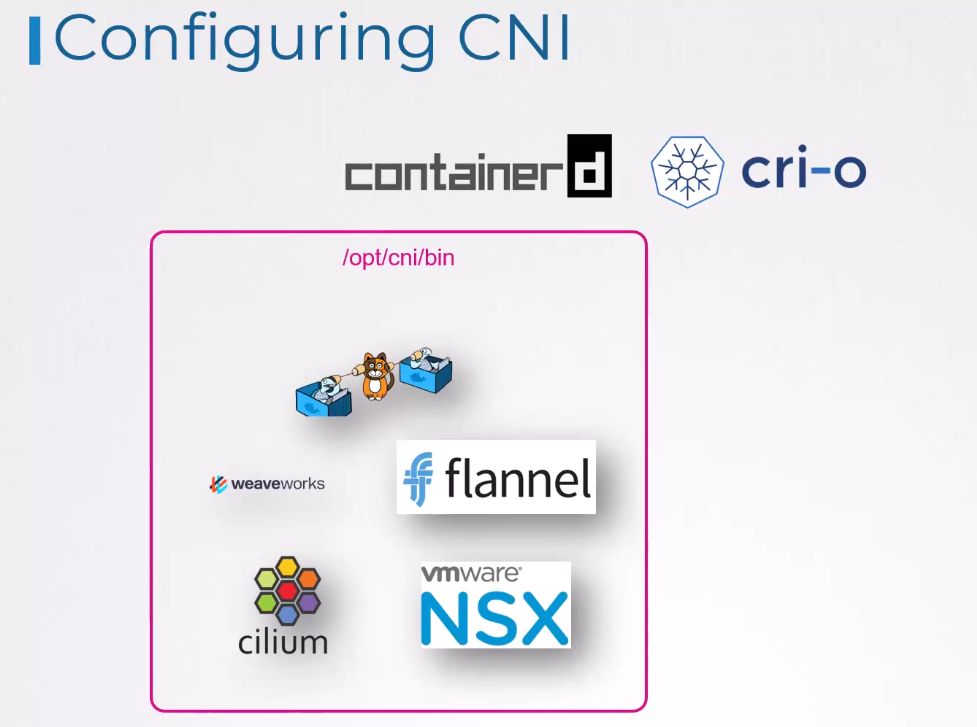

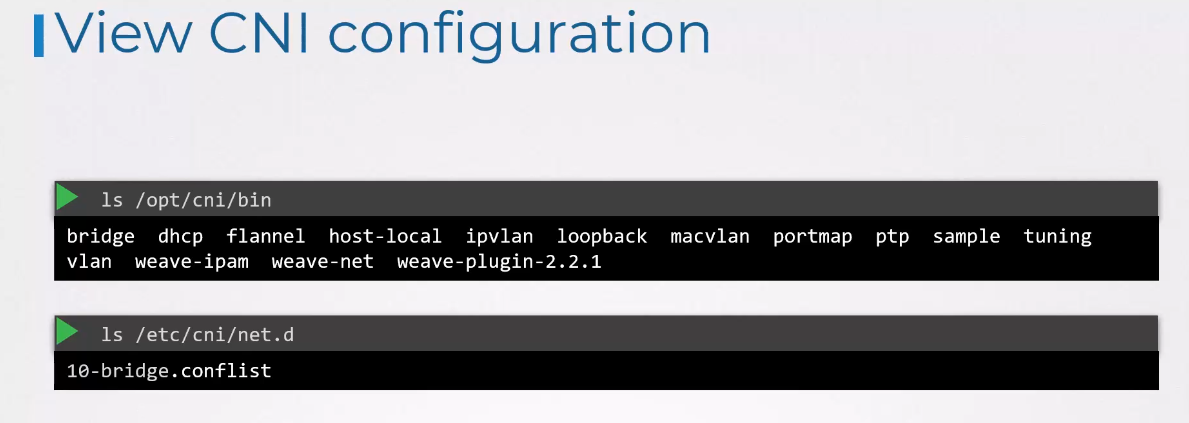

First of all, the network plugins are all installed

in the directory /opt/cni/bin.

So that’s where the container runtimes find the plugins.

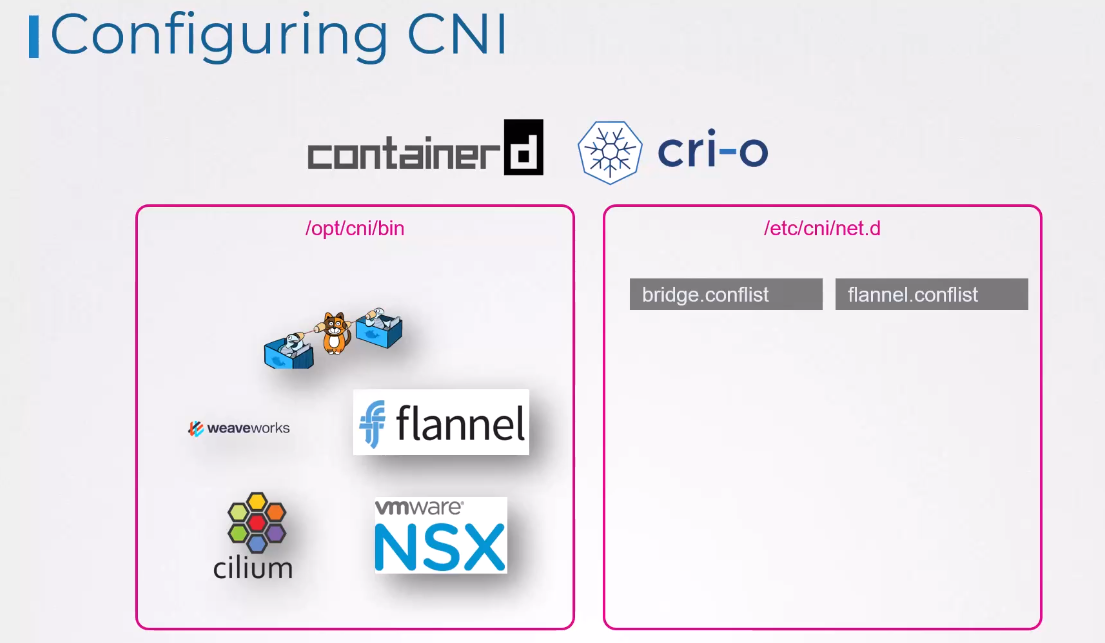

But which plugin to use

and how to use it is configured

in the directory /etc/cni/net.d.

There may be multiple configuration files in this directory

each responsible for configuring a plugin.

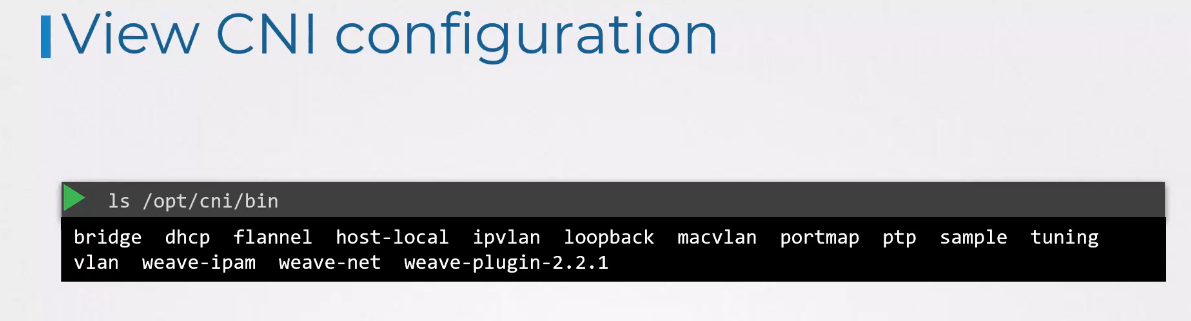

If you look at the cni/bin directory,

you’ll see that it has all the supported CNI plugins

as executables, such as the bridge,

DHCP, flannel, et cetera.
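As a rough illustration of what "installed as executables" means, the sketch below lists the executable files in a plugin directory. The helper name `list_cni_plugins` is hypothetical; the default path is the one mentioned above, and the guard at the bottom simply skips the listing on machines that have no such directory.

```python
import os


def list_cni_plugins(bin_dir="/opt/cni/bin"):
    """Return the sorted names of executable regular files in bin_dir."""
    names = []
    for entry in os.scandir(bin_dir):
        # Each plugin is a plain executable file, e.g. bridge, dhcp, flannel.
        if entry.is_file() and os.access(entry.path, os.X_OK):
            names.append(entry.name)
    return sorted(names)


if os.path.isdir("/opt/cni/bin"):
    print(list_cni_plugins())
```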

The CNI config directory has a set of configuration files.

This is where the container runtime looks

to find out which plugin needs to be used.

In this case, it finds the bridge configuration file.

If there are multiple files here,

it will choose the first one in alphabetical order.
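The selection rule can be sketched in a few lines. This is an approximation, not the runtime's actual code: the helper name `pick_cni_config` is hypothetical, and the list of accepted file extensions is an assumption made for the example.

```python
import os

# Assumption: only files with these extensions count as CNI configuration.
CONF_EXTENSIONS = (".conf", ".conflist", ".json")


def pick_cni_config(conf_dir="/etc/cni/net.d"):
    """Return the path of the first config file in alphabetical order, or None."""
    candidates = sorted(
        name for name in os.listdir(conf_dir)
        if name.endswith(CONF_EXTENSIONS)
    )
    if not candidates:
        return None
    return os.path.join(conf_dir, candidates[0])


if os.path.isdir("/etc/cni/net.d"):
    print(pick_cni_config())
```

This is why configuration files are commonly given numeric prefixes such as `10-`: the prefix decides which file sorts first.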

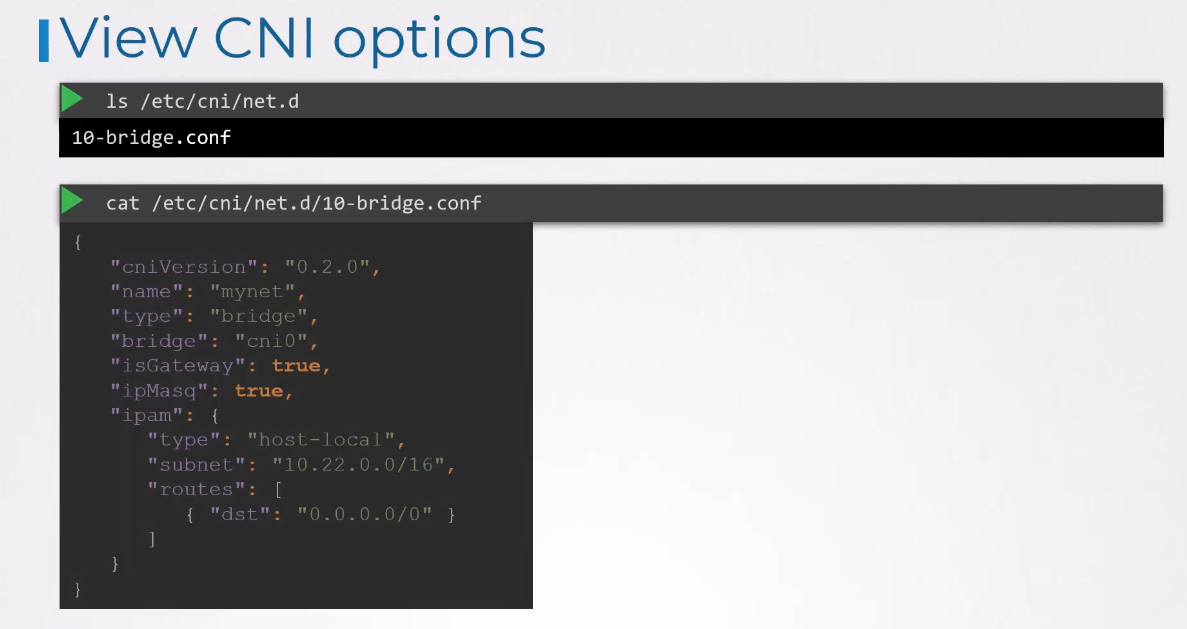

If you look at the bridge conf file, it looks like this.

This is a format defined by the CNI standard

for a plugin configuration file.

Its name is “mynet”, type is “bridge”.

It also has a set of other configurations

which we can relate to the concepts

we discussed in the prerequisite lectures

on bridging, routing, and masquerading in NAT.
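Since the file itself appears only on the slide, here is a sketch of what such a bridge configuration might contain, parsed in Python. Only the name "mynet" and the type "bridge" come from the lecture; the `cniVersion`, the bridge name `cni0`, the subnet, and the route are assumed values chosen for illustration.

```python
import json

# Illustrative configuration; all values other than name and type are assumptions.
BRIDGE_CONF = """
{
  "cniVersion": "0.3.1",
  "name": "mynet",
  "type": "bridge",
  "bridge": "cni0",
  "isGateway": true,
  "ipMasq": true,
  "ipam": {
    "type": "host-local",
    "subnet": "10.22.0.0/16",
    "routes": [{"dst": "0.0.0.0/0"}]
  }
}
"""

conf = json.loads(BRIDGE_CONF)

# Pull out the fields the lecture walks through.
summary = {
    "plugin": conf["type"],
    "network": conf["name"],
    "bridge_is_gateway": conf["isGateway"],
    "masquerade": conf["ipMasq"],
    "ipam_type": conf["ipam"]["type"],
    "pod_subnet": conf["ipam"]["subnet"],
}
print(summary)
```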

The isGateway field defines whether the bridge interface

should get an IP address assigned

so it can act as a gateway.

The ipMasq field defines

if a NAT rule should be added for IP masquerading.

The IPAM section defines IPAM configuration.

This is where you specify the subnet

or range of IP addresses

that will be assigned to pods and any necessary routes.

The type host-local indicates

that the IP addresses are managed locally on this host,

unlike a DHCP server, which maintains them remotely.

The type can also be set to dhcp

to configure an external DHCP server.
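To illustrate what "managed locally on this host" means, here is a simplified sketch of a local allocator that hands out addresses from a subnet. It is not the host-local plugin's implementation: the class name `HostLocalAllocator` is hypothetical, the subnet is an assumed value, leases are kept in memory only, and reserving the first usable address for the gateway is a simplifying assumption.

```python
import ipaddress


class HostLocalAllocator:
    """Hands out addresses from one subnet, tracked only on this host."""

    def __init__(self, subnet):
        self.network = ipaddress.ip_network(subnet)
        hosts = iter(self.network.hosts())
        # Assumption: the first usable address is kept for the bridge gateway.
        self.gateway = next(hosts)
        self._free = hosts
        self.leases = {}  # container ID -> assigned address

    def allocate(self, container_id):
        """Return the container's address, assigning the next free one if new."""
        if container_id in self.leases:
            return self.leases[container_id]
        try:
            ip = next(self._free)
        except StopIteration:
            raise RuntimeError("no free addresses left in " + str(self.network))
        self.leases[container_id] = ip
        return ip


allocator = HostLocalAllocator("10.22.0.0/16")
print(allocator.gateway, allocator.allocate("pod-a"), allocator.allocate("pod-b"))
```

No external server is consulted at any point, which is the contrast with the dhcp type.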

Well, that’s it for this lecture.

Head over to the practice exercises

and practice working with CNI and Kubernetes.